Macquarie Bank is treating its foray into Google Cloud Platform as a “second chance” to explore new methods of automated infrastructure provisioning and management.

Banking and Financial Services CTO Jason O’Connell told the All Day DevOps conference that the bank is using Kubernetes to manage its Google Cloud environment.

“Last year at Macquarie we set up our second public cloud, which is Google Cloud,” O’Connell said.

“This gave us the opportunity to look at a different way of managing the cloud, rethinking what we’re doing with the cloud.

“Because it was our second chance, our second cloud, we could do things differently.

“We have been running Kubernetes in production since 2017 so we’re relatively early users of Kubernetes for a lot of significant workloads around our digital banking offering, and because of that the team was very skilled and experienced at running Kubernetes itself and running workloads there.

“So when we heard about a new way of managing the cloud where we could use Kubernetes - and Kubernetes Operators - to manage the cloud itself and do other automation, we thought this would be an amazingly different way of doing things.”

Macquarie first disclosed this operating model at a Google Cloud summit in September, though the All Day DevOps presentation provided substantially more detail on the effort.

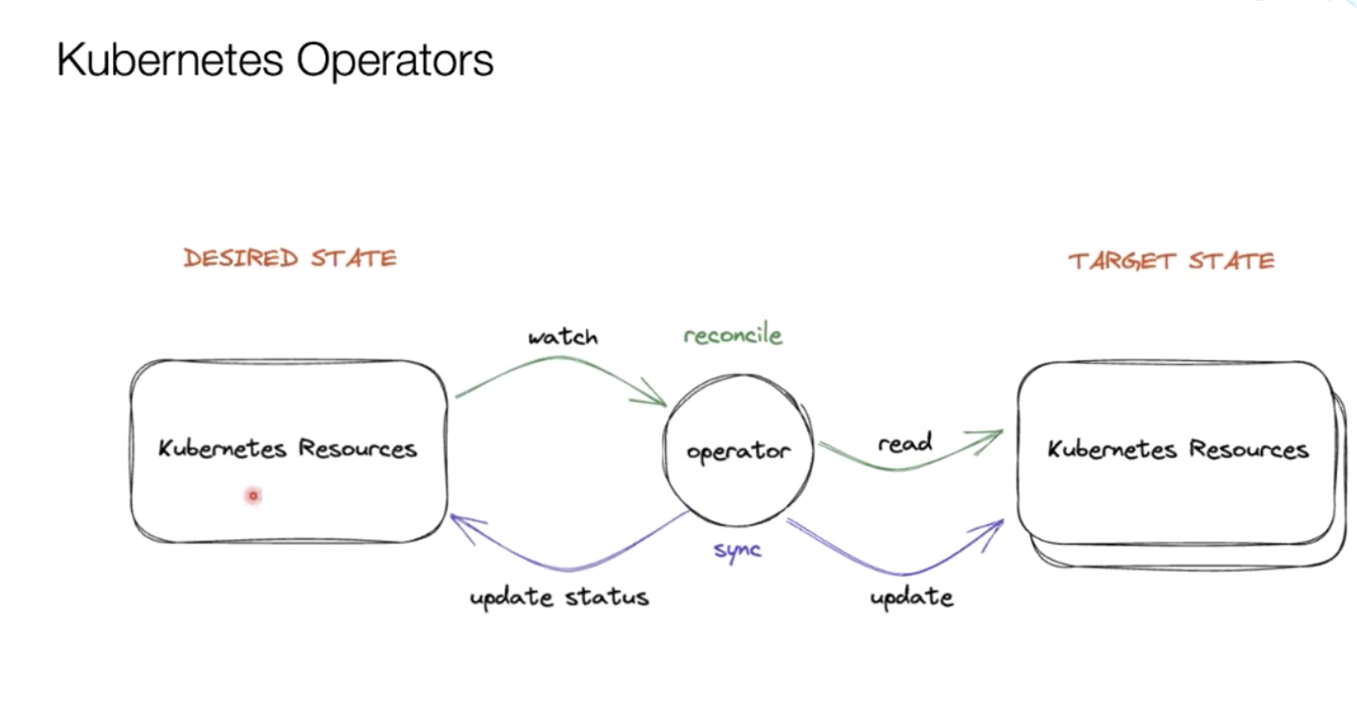

By one definition, operators are bits of code that “act like an extension of [an] engineering team, watching over a Kubernetes environment ... using its current state to make decisions in real time.”

O’Connell said that the concept of using Kubernetes Operators to manage cloud environments had grown in prominence in recent years.

“I think you’re going to hear a lot more about this sort of model in the future,” he said.

“You’ll notice that AWS now has ACK [AWS Controllers for Kubernetes], which allows you to do the same thing that I was talking about with Google, and Crossplane.io is another service that offers the ability to manage cloud via operators as well.”

Macquarie uses a number of operators together to spin up the resources needed to onboard an application or workload into Google Cloud.

The operators sit between Git, where the “desired state” of infrastructure needed for an application or workload is described in code, and Google Cloud, where the “target state”, or actual state, exists.

They constantly watch for events that indicate a change on either side, and then attempt to reconcile the change so that it is reflected in both the desired and target states.

“What we want is that when we create a desired state, an event is triggered to our operator automatically in Kubernetes, the operator’s watching those changes, it’s going to read the target state and reconcile the two, then it would update the target state to make them in sync and return a status,” O’Connell said.

“In this manner we’re constantly in sync when we’re changing the desired state.

“The reverse is also true, so we will watch the target state, read the desired state and then reconcile and sync the two.

“So if, somehow, I managed to delete something from Google Cloud [in the target state], but it’s in my desired state, the reconciliation will happen off the [change] event and it will actually create [that asset] again, because the operator must keep everything in sync continuously.”
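The reconciliation loop O’Connell describes can be illustrated with a short sketch. The Python below is a simplified, hypothetical model rather than Macquarie’s code: plain dictionaries stand in for the desired state in Git and the target state in Google Cloud, and a single `reconcile()` call stands in for one pass of an operator responding to a change event. Production operators are normally built on a controller framework and call real cloud APIs; the `prune` behaviour, which deletes resources that are not declared in Git, is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class ReconcileStatus:
    """Summary an operator might write back to a resource's status field."""
    created: list = field(default_factory=list)
    updated: list = field(default_factory=list)
    deleted: list = field(default_factory=list)


def reconcile(desired: dict, target: dict, prune: bool = True) -> ReconcileStatus:
    """Make `target` (simulated cloud state) match `desired` (state declared in Git).

    Both arguments map a resource name to its spec. `target` is mutated in place,
    standing in for calls to the cloud provider's API.
    """
    status = ReconcileStatus()
    for name, spec in desired.items():
        if name not in target:
            # Declared in Git but absent from the cloud: (re)create it.
            target[name] = dict(spec)
            status.created.append(name)
        elif target[name] != spec:
            # Present but different from its declared spec: overwrite it.
            target[name] = dict(spec)
            status.updated.append(name)
    if prune:
        # Assumption: resources not declared in Git are removed.
        for name in [n for n in target if n not in desired]:
            del target[name]
            status.deleted.append(name)
    return status


# Simulate the scenario O'Connell describes: a resource deleted by hand
# is recreated on the next reconciliation.
desired = {"bucket-a": {"location": "australia-southeast1"}}
cloud: dict = {}
reconcile(desired, cloud)
del cloud["bucket-a"]
print(reconcile(desired, cloud).created)  # ['bucket-a']
```

Because `reconcile()` compares whole states rather than replaying individual changes, running it again after any event, from either side, converges on the same result.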

The idea, O’Connell said, is to avoid configuration drift, where the desired state and target state no longer match due to a change being made in one but not the other.

O’Connell said configuration drift can be a challenge when using existing pipeline-based models for cloud resource deployment.

“What we really want to do is move towards a world where what is in Git is exactly what is in production, and I can check Git and I know that’s what’s in production,” he said.
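Checking that Git matches production amounts to comparing two sets of resources. A minimal sketch, assuming both states can be fetched as name-to-spec mappings; the `detect_drift()` and `in_sync()` helpers and the sample resources are hypothetical:

```python
def detect_drift(desired: dict, actual: dict) -> dict:
    """Compare state declared in Git with state observed in production.

    Returns the names of resources that are missing from production,
    running in production but not declared in Git, or declared with a
    different spec from the one that is running.
    """
    missing = sorted(set(desired) - set(actual))
    unmanaged = sorted(set(actual) - set(desired))
    changed = sorted(
        name for name in set(desired) & set(actual) if desired[name] != actual[name]
    )
    return {"missing": missing, "unmanaged": unmanaged, "changed": changed}


def in_sync(desired: dict, actual: dict) -> bool:
    """True when Git is an exact description of production."""
    return not any(detect_drift(desired, actual).values())


git = {"vpc": {"cidr": "10.0.0.0/16"}, "bucket": {"versioning": True}}
prod = {"vpc": {"cidr": "10.0.0.0/16"}, "bucket": {"versioning": False}, "vm": {}}
print(detect_drift(git, prod))
# {'missing': [], 'unmanaged': ['vm'], 'changed': ['bucket']}
```

In a pipeline-based model a check like this only runs when someone invokes it; an operator effectively runs it on every event and corrects what it finds.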

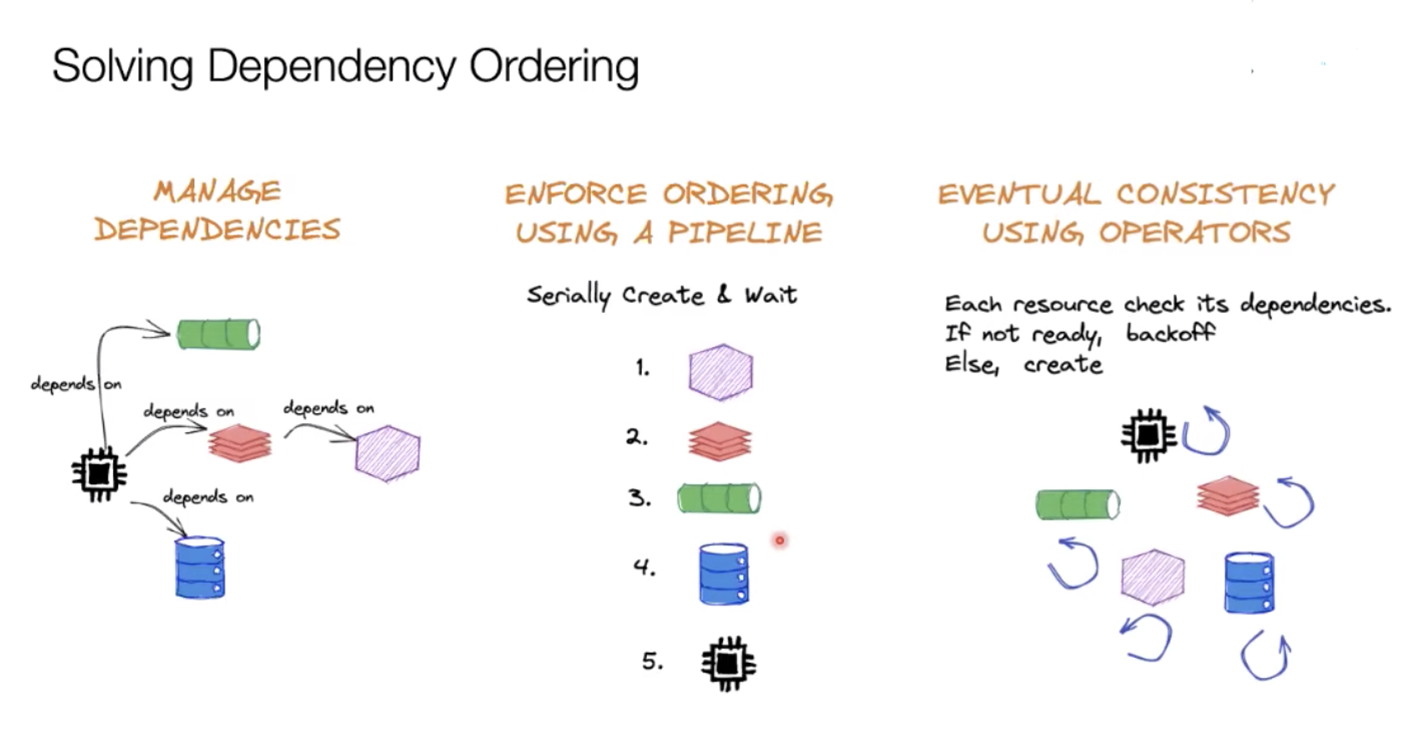

If what is being deployed to the cloud has dependencies (that is, it relies on other pieces of software or resources to function), the operator first checks that those dependencies are live and operating correctly before spinning up the resource it has been assigned to create.

O’Connell said this solved the ordering problem that arises when one element can only be deployed once the elements it depends on are live and operational.

“If something isn’t ready, [the operator] tries again and tries again and [then] slowly backs off,” O’Connell said.

“When we build operators to manage Google Cloud or anything else, we have to build in the same context - that we attempt to do something, if it doesn’t work we update the status, we try again and then we back off trying again.”

O’Connell said that the operators would eventually deploy the right resources and the correct order would emerge on its own.

“Eventually after you’ve created all these resources, it’ll work itself out,” he said.
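The retry-and-back-off behaviour can be sketched as follows. This is an illustrative model, not Macquarie’s implementation: `reconcile_with_backoff()`, the `gke-cluster` and `network` resources, and the doubling delay capped at 60 seconds are all assumptions. The injectable `sleep` parameter lets the example run instantly.

```python
import time


def reconcile_with_backoff(name, deps, is_ready, create,
                           max_attempts=6, base=1.0, cap=60.0, sleep=time.sleep):
    """Create `name` only once every dependency in `deps` reports ready.

    Returns the status messages recorded along the way, standing in for the
    status an operator writes back to Kubernetes.
    """
    status = []
    delay = base
    for attempt in range(1, max_attempts + 1):
        pending = [d for d in deps if not is_ready(d)]
        if not pending:
            create(name)
            status.append(f"attempt {attempt}: Ready")
            return status
        status.append(f"attempt {attempt}: waiting on {', '.join(pending)}")
        if attempt < max_attempts:
            sleep(min(delay, cap))  # back off before trying again
            delay *= 2              # exponential growth, capped at `cap`
    status.append("not ready; giving up until the next event")
    return status


# Hypothetical dependency that only becomes ready on its third check.
checks = {"network": 0}


def network_is_ready(dep):
    checks[dep] += 1
    return checks[dep] >= 3


created, slept = [], []
log = reconcile_with_backoff("gke-cluster", ["network"], network_is_ready,
                             created.append, sleep=slept.append)
print(log)    # ['attempt 1: waiting on network', 'attempt 2: waiting on network', 'attempt 3: Ready']
print(slept)  # [1.0, 2.0]
```

Kubernetes controller frameworks generally handle this requeueing themselves through a rate-limited work queue, so in practice an operator usually just reports that it is not ready yet and returns.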

“What we’re really building is somewhat like microservices - these small operators that each have their specialist task to do from an automation perspective, whether that’s building a Git repo, managing Google Cloud resources, or managing a service like Elasticsearch.

“Each operator has its own aim, and then we compose those operators with higher-order operators which then do more automation on top of that.

“And so you have all of these operators running in this one control cluster that are constantly listening to events and resyncing and eventually working themselves out.”
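Composing small operators under a higher-order one can be modelled as an event queue in which each resource kind has a single owning operator. In this hypothetical sketch, an `App` resource is expanded by an onboarding operator into a Git repo, a Google Cloud project and, optionally, an Elasticsearch service, each handled by its own specialist; the resource kinds, names and `run_control_cluster()` loop are invented for illustration.

```python
from collections import deque


def specialist(store):
    """Build a leaf operator that records resources of one kind in `world`."""
    def operator(resource, world):
        world.setdefault(store, set()).add(resource["name"])
        return []  # leaf operators emit no further events
    return operator


def onboarding_operator(resource, world):
    """Higher-order operator: expands one App into resources for the specialists."""
    app = resource["name"]
    children = [
        {"kind": "GitRepo", "name": f"{app}-repo"},
        {"kind": "GcpProject", "name": f"{app}-project"},
    ]
    if resource.get("search"):
        children.append({"kind": "Elasticsearch", "name": f"{app}-es"})
    return children


# Each resource kind is owned by exactly one operator (all kinds hypothetical).
OPERATORS = {
    "App": onboarding_operator,
    "GitRepo": specialist("repos"),
    "GcpProject": specialist("projects"),
    "Elasticsearch": specialist("search"),
}


def run_control_cluster(events):
    """Dispatch events to their owning operators until none remain."""
    world, queue = {}, deque(events)
    while queue:
        resource = queue.popleft()
        queue.extend(OPERATORS[resource["kind"]](resource, world))
    return world


print(run_control_cluster([{"kind": "App", "name": "payments", "search": True}]))
# {'repos': {'payments-repo'}, 'projects': {'payments-project'}, 'search': {'payments-es'}}
```

Keeping each operator narrow means a new capability can be added by registering another specialist, without changing the ones already running.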

The bank has added safeguards to prevent unwanted changes merged into Git from being automatically applied to the production environment.

“We’ve got a GitOps bot that does checks on things like making sure you’ve got a release ticket, because remember when you merge something in Git that’s going to apply into production, [so] we need to do a bunch of change control here, making sure we’ve got [the right] approvals,” O’Connell said.

The bot can reject a pull request that fails these checks, which helps keep Git and the cloud in sync.
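A pre-merge check of the kind the GitOps bot performs might look like the sketch below. The release-ticket format (`CHG-` followed by digits), the two-approval rule and the exclusion of self-approval are hypothetical policies chosen for illustration; the article does not describe the bot’s actual rules.

```python
import re
from dataclasses import dataclass, field

# Hypothetical policy values; not taken from Macquarie's bot.
TICKET_PATTERN = re.compile(r"\bCHG-\d+\b")
REQUIRED_APPROVALS = 2


@dataclass
class PullRequest:
    author: str
    title: str
    body: str = ""
    approvers: set = field(default_factory=set)


def review(pr: PullRequest):
    """Return ("approve" | "reject", reasons) for a change bound for production."""
    reasons = []
    if not TICKET_PATTERN.search(f"{pr.title}\n{pr.body}"):
        reasons.append("missing release ticket")
    # Self-approval does not count towards change control.
    independent = pr.approvers - {pr.author}
    if len(independent) < REQUIRED_APPROVALS:
        reasons.append(f"needs {REQUIRED_APPROVALS} approvals, has {len(independent)}")
    return ("reject" if reasons else "approve", reasons)


print(review(PullRequest("carol", "Add bucket", "Release CHG-1042", {"alice", "bob"})))
# ('approve', [])
print(review(PullRequest("carol", "Add bucket", approvers={"carol"})))
# ('reject', ['missing release ticket', 'needs 2 approvals, has 0'])
```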

The bank also validates resources by running checks inside Kubernetes itself using the Open Policy Agent (OPA) Gatekeeper governance mechanism.
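Gatekeeper policies are written in Rego, packaged as ConstraintTemplate resources and enforced through a validating admission webhook, so the Python below is only a model of the decision logic, not Gatekeeper itself. It mirrors a required-labels constraint, a common example in Gatekeeper’s policy library; the `owner` and `cost-centre` labels and the sample manifest are assumptions.

```python
# Hypothetical constraint: every managed resource must carry these labels.
REQUIRED_LABELS = {"owner", "cost-centre"}


def violations(manifest: dict, required=REQUIRED_LABELS) -> list:
    """Mimic a required-labels admission check on a Kubernetes-style manifest."""
    labels = manifest.get("metadata", {}).get("labels", {})
    missing = sorted(required - set(labels))
    return [f"missing required label: {name}" for name in missing]


def admit(manifest: dict) -> dict:
    """Return an allow/deny decision, loosely modelled on an admission response."""
    problems = violations(manifest)
    return {"allowed": not problems, "message": "; ".join(problems)}


# Illustrative manifest only.
bucket = {
    "kind": "StorageBucket",
    "metadata": {"name": "payments-logs", "labels": {"owner": "payments"}},
}
print(admit(bucket))
# {'allowed': False, 'message': 'missing required label: cost-centre'}
```

Because the check runs at admission time, a non-compliant resource is refused before any operator acts on it.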

O’Connell said that the model of using Kubernetes to manage the GCP environment worked best for workloads that are provisioned once and then “could last for years” without needing to be touched or reconfigured.

He added that the model is “not just for managing Google Cloud” and the bank uses it “for managing services outside of Google Cloud as well.”